Show the code

name_age = {}

for i in range(3):

name = input("Name? ")

age = int(input("Age? "))

name_age[name] = age

name_choice = input("Name to find? ")

print(name_age[name_choice])Naomi Ceder

Feb, 2025

In the chapters that follow, I show you the essentials of Python. I start from the absolute basics of what makes a Python program and move through Python’s built-in data types and control structures, as well as defining functions and using modules. The last chapter of this part moves on to show you how to write standalone Python programs, manipulate files, handle errors, and use classes.

This chapter describes the absolute basics in Python: how to use assignments and expressions, how to use numbers and strings, how to indicate comments in code, and so forth. It starts with a discussion of how Python block structures its code, which differs from every other major language.

Python differs from most other programming languages because it uses whitespace and indentation to determine block structure (that is, to determine what constitutes the body of a loop, the else clause of a conditional, and so on). Most languages use braces of some sort to do this. The following is C code that calculates the factorial of 9, leaving the result in the variable r:

The braces delimit the body of the while loop, the code that is executed with each repetition of the loop. The code is usually indented more or less as shown, to make clear what’s going on, but it could also be written as follows:

/* And this is C code with arbitrary indentation */

int n, r;

n = 9;

r = 1;

while (n > 0) {

r *= n;

n--;

}The code still would execute correctly, even though it’s rather difficult to read. The Python equivalent is

# This is Python code.

n = 9

r = 1

while n > 0:

r = r * n # Python also supports C-style r *= n.

n = n - 1 # Python also supports n –= 1.Python doesn’t use braces to indicate code structure; instead, the indentation itself is used. The last two lines of the previous code are the body of the while loop because they come immediately after the while statement and are indented one level further than the while statement. If those lines weren’t indented, they wouldn’t be part of the body of the while.

Note that while it’s more explicit and clearer to say r = r * n, it’s also possible and good Pythonic style to use the shorter r *= n form—both are fine in Python.

Using indentation to structure code rather than braces may take some getting used to, but there are significant benefits:

It’s impossible to have missing or extra braces. You never need to hunt through your code for the brace near the bottom that matches the one a few lines from the top.

You probably use consistent indentation in your code already, so this won’t be a big step. If you’re using IDLE or one of the most common coding editors or IDEs—Emacs, VIM, VS Code, or PyCharm, to name a few—it will automatically indent lines. You just need to backspace out of levels of indentation when that block is finished. One thing that may trip you up once or twice until you get used to it is the fact that the Python interpreter returns an error message if you have a space (or spaces) preceding the commands you enter in a Jupyter notebook cell or at a Python shell’s >>> prompt.

For the most part, anything following a # symbol in a Python file is a comment and is disregarded by the interpreter. The obvious exception is a # in a string, which is just a character of that string:

You’ll put comments in Python code frequently.

The most commonly used command in Python is assignment, which looks pretty close to what you might’ve used in other languages. Python code to create a variable called x and assign that variable to the value 5 is

In Python, unlike in many other computer languages, neither a variable type declaration nor an end-of-line delimiter (like a ;) is necessary. The line is ended by the end of the line. Variables are created automatically when they’re first assigned.

Variable names are case sensitive and can include any alphanumeric character as well as underscores but must start with a letter or underscore. See section 4.11 for more guidance on the Pythonic style for creating variable names.

The name variable is somewhat misleading in Python; name or label would be more accurate. However, it seems that pretty much everyone calls variables variables at some time or another. Whatever you call them, you should know how they really work in Python.

A common, but inaccurate, explanation is that a variable is a container that stores a value, somewhat like a bucket. This would be reasonable for many programming languages (C, for example).

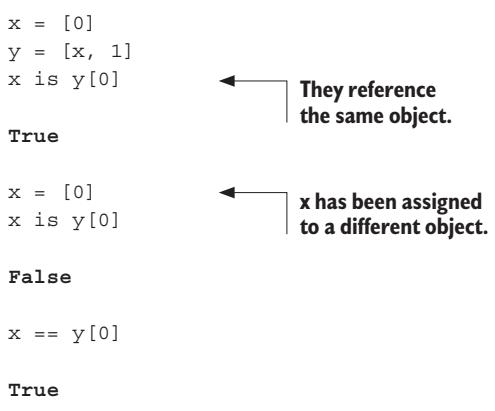

However, in Python variables aren’t buckets. Instead, they’re labels or tags that refer to objects in the Python interpreter’s namespace. Any number of labels (or variables) can refer to the same object, and when that object changes, the value referred to by all of those variables also changes.

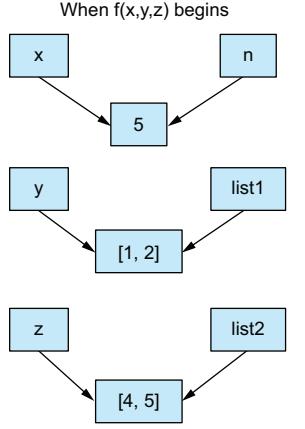

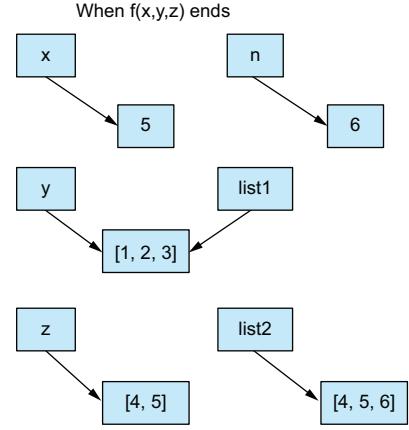

To see what this means, look at the following simple code:

If you’re thinking of variables as containers, this result makes no sense. How could changing the contents of one container simultaneously change the other two? However, if variables are just labels referring to objects, it makes sense that changing the object that all three labels refer to would be reflected everywhere.

If the variables are referring to constants or immutable values, this distinction isn’t quite as clear:

Because the objects they refer to can’t change, the behavior of the variables in this case is consistent with either explanation. In fact, in this case, after the third line, a, b, and c all refer to the same unchangeable integer object with the value 1. The next line, b = 5, makes b refer to the integer object 5 but doesn’t change the references of a or c.

Python variables can be set to any object, whereas in C and many other languages, variables can store only the type of value they’re declared as. The following is perfectly legal Python code:

x starts out referring to the string object “Hello” and then refers to the integer object 5. Of course, this feature can be abused, because arbitrarily assigning the same variable name to refer successively to different data types can make code confusing.

A new assignment overrides any previous assignments. The del statement deletes the variable (but not necessarily the object it was attached to). Trying to print the variable’s object after deleting it results in an error, as though the variable had never been created in the first place:

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-2-260d38800877> in <cell line: 5>()

3

4 del x

----> 5 print(x) # <-- Line where error occurs

NameError: name 'x' is not defined # <-- Name of exception that occurredHere, you have your first look at a traceback, which is printed when an error, called an exception, has been detected. Notice that when we try to access a variable after we’ve deleted it, there is a line of dashes followed by the name of the exception, a NameError. The traceback has a little arrow —-> pointing to the line with the error. The last line explains the exception that was detected, which in this case is a NameError exception because x is no longer defined or valid after its deletion.

In general, the full dynamic call structure of the existing function at the time of the error’s occurrence is returned. If you’re using another Python environment, you might obtain the same information with some small differences. The traceback could look something like the following:

Traceback (most recent call last):

File "/home/naomi/Dropbox/QPB4/code/vscode/ch04.py", line 5, in <module>

print(x)

^ # <-- Error location indicated with a ^ under the problem

NameError: name 'x' is not defined

This format is a bit simpler, with just a ^ under the error on the next line, but the same information is there.

Chapter 14 describes this mechanism in more detail. A full list of the possible exceptions and what causes them is in the Python standard library documentation. Use the index to find any specific exception (such as NameError) you receive.

While Python does not require that you specify types for variables (and function parameters and return values, etc.), current versions of Python do allow you to do so. As mentioned in the previous chapter, in Python many times the type of an object referred to by a variable, or needed as a parameter, or returned by a function or method is not always immediately obvious.

Mixing incompatible types of objects will cause a runtime exception in Python, but it will not raise an error at compile time. Particularly for large projects, there are many times when having the types of objects more explicitly available would be useful. For this, Python has added type hints.

The type hinting notation can be read by type-checking tools like mypy, pyright, pyre, or pytype, as well as several common IDEs, to flag the use of an incompatible or unexpected type. While these tools can report the error, Python itself will not raise a runtime error if the type hints are not followed.

For example, we can create a simple function to add two numbers and use type hinting to indicate that it should only receive and return objects of type int:

def add_ints(x: int, y: int) -> int: # <-- Parameters and return value noted as ints

return x + y

p: int = 2.3 # <-- Variable p marked as int but set to float

z = add_ints(1, 2)

w = add_ints(1.5, 2.5) # <-- Function called with floatsThe function definition specifies that both of the parameters and the return value will be of type int. In the first line of code after the function, we specify that the variable p should be of type int but then try to set it to a float. Then the function add_ints is called first with two integers and then with two floats.

If we run this code, Python will execute it without any warning or complaint. However, if we save this code in a file test_types.py and run the mypy checker on that file, three errors will be flagged:

test\_types.py:4: error: Incompatible types in assignment (expression has

➥type "float", variable has type "int") [assignment] # <-- Flags setting int variable p to a float

test_types.py:8: error: Argument 1 to "add_ints" has incompatible type

➥"float"; expected "int" [arg-type] # <-- Flags calling function with two floats instead of ints

test_types.py:8: error: Argument 2 to "add_ints" has incompatible type

➥"float"; expected "int" [arg-type] # <-- Flags calling function with two floats instead of ints

Found 3 errors in 1 file (checked 1 source file)When you run the mypy type checker on this code, it reports an error with setting the variable p to 2.3, not because 2.3 is a bad value for p but because the annotation indicated that it should be an int. It also flags two errors in the second call to add_ints, since both of the parameters are floats, but the function is marked as taking two ints. In both cases, Python will run the code without complaint, but the things marked by mypy indicate some confusion between intention and actual use, and that confusion is likely to lead to bugs later on.

One reason that type hints are increasingly used is that they help reduce confusion as to what type is expected, in many situations. In a large codebase, it can be particularly frustrating to hunt a bug where an unexpected type of object is returned. Type hints allow many such situations to be caught and fixed before runtime. Type information also allows many editors and IDEs to warn about such errors and suggest appropriate alternatives during the coding process.

While type hints can be useful in many situations, there are situations where they may not be worth the trouble:

Fortunately, since type hints are optional, you don’t have to add type hints for everything all at once. If you have decided it’s a good idea to use type hints, you can add them progressively, starting with the places where having the type information handy will do the most good.

In this text, we will not routinely use type hints in the short code examples, but we will use them in some of the longer examples and answers to the lab exercises.

Python supports arithmetic and similar expressions; these expressions will be familiar to most readers. The following code calculates the average of 3 and 5, leaving the result in the variable z:

Note that arithmetic operators involving only integers do not always return an integer. Even though all the values are integers, division (starting with Python 3) returns a floating-point number, so the fractional part isn’t truncated. If you want traditional integer division returning an integer, you can use // instead.

Standard rules of arithmetic precedence apply. If you’d left out the parentheses in the last line, the code would’ve been calculated as x + (y / 2).

Expressions don’t have to involve just numerical values; strings, Boolean values, and many other types of objects can be used in expressions in various ways. I discuss these objects in more detail as they’re used.

In a Jupyter notebook, create some variables. What happens when you try to put spaces, dashes, or other nonalphanumeric characters in the variable name? Play around with a few complex expressions, such as x = 2 + 4 * 5 – 6 / 3. Use parentheses to group the numbers in different ways and see how the result changes compared with the original ungrouped expression.

You’ve already seen that Python, like most other programming languages, indicates strings through the use of double quotes. This line leaves the string “Hello, World” in the variable x:

x = “Hello, World”

Backslashes can be used to escape characters—to give them special meanings. \n means the newline character, \t means the tab character, \\ means a single normal backslash character, and \" is a plain double-quote character. It doesn’t end the string:

You can use single quotes instead of double quotes. The following two lines do the same thing:

The only difference is that you don’t need to backslash ” characters in single-quoted strings or ’ characters in double-quoted strings:

x = "Don't need a backslash"

x = 'Can\'t get by without a backslash'

x = "Backslash your \" character!"

x = 'You can leave the " alone'You can’t split a normal string across lines. This code won’t work:

# This Python code will cause an ERROR -- you can't

# split the string across two lines.

x = "This is a misguided attempt to

put a newline into a string without using backslash-n"File "<ipython-input-3-dc07520d5086>", line 2

x = "This is a misguided attempt to

^

SyntaxError: unterminated string literal (detected at line 2)But Python offers triple-quoted strings, which let you have multiline strings and include single and double quotes without backslashes:

x = """Starting and ending a string with triple " or ' characters

permits embedded newlines, and the use of " and ' without

backslashes"""Now x is the entire sentence between the """ delimiters. [You can use triple single quotes (''') instead of triple double quotes to do the same thing.]

Python offers enough string-related functionality that chapter 6 is devoted to the topic.

Because you’re probably familiar with standard numeric operations from other languages, this book doesn’t contain a separate chapter describing Python’s numeric abilities. This section describes the unique features of Python numbers, and the Python documentation lists the available functions.

Python offers four kinds of numbers: integers, floats, complex numbers, and Booleans. An integer constant is written as an integer—0, –11, +33, 123456—and has unlimited range, restricted only by the resources of your machine. A float can be written with a decimal point or in scientific notation: 3.14, –2E-8, 2.718281828. The precision of these values is governed by the underlying machine but is typically equal to double (64-bit) types in C. Complex numbers are probably of limited interest and are discussed separately later in the section. Booleans are either True or False and behave identically to 1 and 0 except for their string representations.

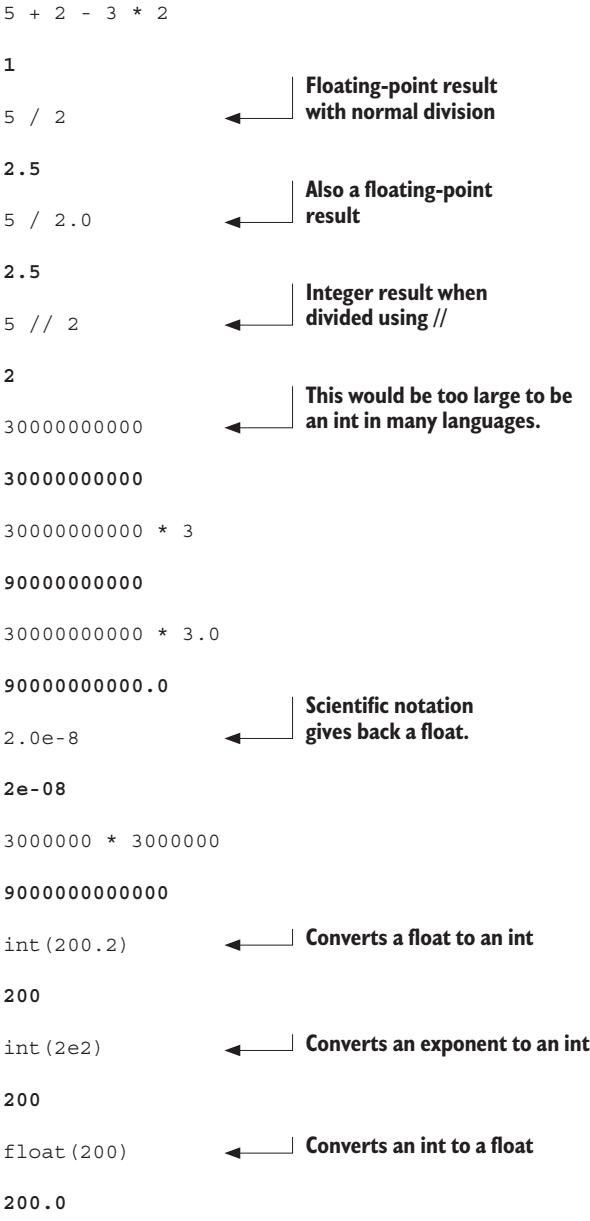

Arithmetic is much like it is in C. Operations involving two integers produce an integer, except for division (/), which results in a float. If the // division symbol is used, the result is an integer, rounding down. Operations involving a float always produce a float. Here are a few examples:

The last three commands are explicit conversions between types. Note that converting from a float to an int will truncate the value and discard the decimal portion. On the other hand, converting an int to a float will always add a .0.

Numbers in Python have two advantages over C or Java: integers can be arbitrarily large, and the division of two integers results in a float.

Python provides the following number-related functions as part of its core:

abs, divmod, float, hex, int, max, min, oct, pow, roundSee the documentation for the details of how each works.

More advanced numeric functions, such as the trig and hyperbolic trig functions, as well as a few useful constants, aren’t built into Python but are provided in a standard module called math. I explain modules in detail later. For now, it’s sufficient to know that you must make the math functions in this section available by starting your Python program or interactive session with the statement

The math module provides the following functions and constants:

acos, asin, atan, atan2, ceil, cos, cosh, e, exp, fabs, floor, fmod,

frexp, hypot, ldexp, log, log10, mod, pi, pow, sin, sinh, sqrt, tan, tanhSee the documentation for details.

The core Python installation isn’t well suited to intensive numeric computation because of speed constraints. But the powerful Python extension NumPy provides highly efficient implementations of many advanced numeric operations. The emphasis is on array operations, including multidimensional matrices and more advanced functions such as the fast Fourier transform. You should be able to find NumPy (or links to it) at www.scipy.org.

Complex numbers are created automatically whenever an expression of the form nj is encountered, with n having the same form as a Python integer or float. j is, of course, standard notation for the imaginary number equal to the square root of –1, for example:

Note that Python expresses the resulting complex number in parentheses as a way of indicating that what’s printed to the screen represents the value of a single object:

Calculating j * j gives the expected answer of –1, but the result remains a Python complex-number object. Complex numbers are never converted automatically to equivalent real or integer objects. But you can easily access their real and imaginary parts with real and imag:

Note that real and imaginary parts of a complex number are always returned as floating-point numbers.

The functions in the math module don’t apply to complex numbers; the rationale is that most users want the square root of –1 to generate an error, not an answer! Instead, similar functions, which can operate on complex numbers, are provided in the cmath module:

acos, acosh, asin, asinh, atan, atanh, cos, cosh, e, exp, log, log10,

pi, sin, sinh, sqrt, tan, tanh.To make clear in the code that these functions are special-purpose complex-number functions and to avoid name conflicts with the more normal equivalents, it’s best to import the cmath module with

and then to explicitly refer to the cmath package when using the function:

This is a good example of why it’s best to minimize the use of the from

See chapter 10 for more details on how to use modules and module names.

The important thing to keep in mind is that by importing the cmath module, you can do almost anything with complex numbers that you can do with other numbers.

In a Jupyter notebook, create some string and number variables (integers, floats, and complex numbers). Experiment a bit with what happens when you do operations with them, including across types. Can you multiply a string by an integer, for example, or can you multiply it by a float or complex number? Also load the math module and try a few of the functions; then load the cmath module and do the same. What happens if you try to use one of those functions on an integer or float after loading the cmath module? How might you get the math module functions back?

In addition to standard types such as strings and numbers, Python has a special basic data type that defines a single special data object called None. As the name suggests, None is used to represent an empty value. It appears in various guises throughout Python. For example, a procedure in Python is just a function that doesn’t explicitly return a value, which means that, by default, it returns None.

None is often useful in day-to-day Python programming as a placeholder to indicate a point in a data structure where meaningful data will eventually be found, even though that data hasn’t yet been calculated. You can easily test for the presence of None because there’s only one instance of None in the entire Python system (all references to None point to the same object), and None is equivalent only to itself.

You can use the input() function to get input from the user. Use the prompt string you want to display to the user as input’s parameter:

Name? JaneAge? 28This is a fairly simple way to get user input. The one catch is that the input comes in as a string, so if you want to use it as a number, you have to use the int() or float() function to convert it to an int or float.

Experiment with the input() function to get string and integer input. Using code similar to the previous code, what is the effect of not using int() around the call to input()for integer input? Can you modify that code to accept a float—say, 28.5? What happens if you deliberately enter the wrong type of value? Examples include a float in which an integer is expected and a string in which a number is expected—and vice versa.

Python provides various built-in operators, from the standard (+, *, and so on) to the more esoteric, such as operators for performing bit shifting, bitwise logical functions, and so forth. Most of these operators are no more unique to Python than to any other language; hence, I won’t explain them in the main text. You can find a complete list of the Python built-in operators in the documentation.

Python has relatively few limitations on coding style with the obvious exception of the requirement to use indentation to organize code into blocks. Even in that case, the amount of indentation and type of indentation (tabs versus spaces) isn’t mandated. However, there are preferred stylistic conventions for Python, contained in Python Enhancement Proposal (PEP) 8, which is summarized in appendix A and available online at <www.python.org/dev/peps/pep-0008/>. A selection of Pythonic conventions is provided in table 4.1, but to fully absorb Pythonic style, periodically reread PEP 8.

| Situation | Suggestion | Example |

|---|---|---|

| Module/package names | Short, all lowercase, underscores only if needed | imp, sys |

| Function names | All lowercase, underscores_for_readablitiy |

foo(), my_func() |

| Variable names | All lowercase, underscores_for_readablitiy |

my_var |

| Class names | CapitalizeEachWord | MyClass |

| Constant names | ALL_CAPS_WITH_UNDERSCORES | PI, TAX_RATE |

| Indentation | Four spaces per level, no tabs | |

| Comparisons | Don’t compare explicitly to True or False. | if my_var:if not my_var: |

I strongly urge you to follow the conventions of PEP 8. They’re wisely chosen and time tested, and they’ll make your code easier for you and other Python programmers to understand.

Which of the following variable and function names do you think are not good Pythonic style? Why?

bar(), varName, VERYLONGVARNAME, foobar, longvarname,

foo_bar(), really_very_long_var_nameIn this chapter, I discuss the two major Python sequence types: lists and tuples. At first, lists may remind you of arrays in many other languages, but don’t be fooled: lists are a good deal more flexible and powerful than plain arrays.

Tuples are like lists that can’t be modified; you can think of them as a restricted type of list or as a basic record type. I discuss the need for such a restricted data type later in the chapter. This chapter also discusses another Python collection type: sets. Sets are useful when an object’s membership in the collection, as opposed to its position, is important.

Most of the chapter is devoted to lists, because if you understand lists, you pretty much understand tuples. The last part of the chapter discusses the differences between lists and tuples in both functional and design terms.

A list in Python is similar to an array in Java or C or any other language; it’s an ordered collection of objects. You create a list by enclosing a comma-separated list of elements in square brackets, as follows:

Note that you don’t have to worry about declaring the list or fixing its size ahead of time. This line creates the list as well as assigns it, and a list automatically grows or shrinks as needed.

A typed array module available in Python provides arrays based on C data types. Information on its use can be found in the documentation for the Python standard library. I suggest that you look into it only if you really need performance improvement. If a situation calls for numerical computations, you should consider using NumPy, mentioned in chapter 4 and available at www.scipy.org/.

Unlike lists in many other languages, Python lists can contain different types of elements; a list element can be any Python object. The following is a list that contains a variety of elements:

Probably the most basic built-in list function is the len function, which returns the number of elements in a list:

Note that the len function doesn’t count the items in the inner, nested list.

len()

What would len() return for each of the following? [0]; []; [[1, 3, [4, 5], 6], 7]

Understanding how list indices work will make Python much more useful to you. Please read the whole section!

Elements can be extracted from a Python list by using a notation like C’s array indexing. Like C and many other languages, Python starts counting from 0; asking for element 0 returns the first element of the list, asking for element 1 returns the second element, and so forth. The following are a few examples:

But Python indexing is more flexible than C indexing. If indices are negative numbers, they indicate positions counting from the end of the list, with –1 being the last position in the list, –2 being the second-to-last position, and so forth. Continuing with the same list x, you can do the following:

For operations involving a single list index, it’s generally satisfactory to think of the index as pointing at a particular element in the list. For more advanced operations, it’s more correct to think of list indices as indicating positions between elements. In the list [“first”, “second”, “third”, “fourth”], you can think of the indices as pointing as follows.

| x =[ | “first”, | “second”, | “third”, | “fourth” | ] | ||||

|---|---|---|---|---|---|---|---|---|---|

| Positive indices | 0 | 1 | 2 | 3 | |||||

| Negative indices | –4 | –3 | –2 | –1 |

This is irrelevant when you’re extracting a single element, but Python can extract or assign to an entire sublist at once—an operation known as slicing. Instead of entering list[index] to extract the item just after index, enter list[index1:index2] to extract all items, including index1 and up to (but not including) index2, into a new list. The following are some examples:

x = ["first", "second", "third", "fourth"]

x[1:-1]

#> ['second', 'third']

x[0:3]

#> ['first', 'second', 'third']

x[-2:-1]

['third']It may seem reasonable that if the second index indicates a position in the list before the first index, this code would return the elements between those indices in reverse order, but this isn’t what happens. Instead, this code returns an empty list:

When slicing a list, it’s also possible to leave out index1 or index2. Leaving out index1 means “Go from the beginning of the list,” and leaving out index2 means “Go to the end of the list”:

Omitting both indices makes a new list that goes from the beginning to the end of the original list—that is, copies the list. This technique is useful when you want to make a copy that you can modify without affecting the original list:

y = x[:]

y[0] = '1 st'

y

#> ['1 st', 'second', 'third', 'fourth']

x

#> ['first', 'second', 'third', 'fourth']Using what you know about the len() function and list slices, how would you combine the two to get the second half of a list when you don’t know what size it is? Experiment in the Python shell to confirm that your solution works.

You can use list index notation to modify a list as well as to extract an element from it. Put the index on the left side of the assignment operator:

Slice notation can be used here too. Saying something like lista[index1:index2] = listb causes all elements of lista between index1 and index2 to be replaced by the elements in listb. listb can have more or fewer elements than are removed from lista, in which case the length of lista is altered. You can use slice assignment to do several things, as shown in the following:

x = [1, 2, 3, 4]

x[len(x):] = [5, 6, 7] # <-- Appends a list to end of a list

x

#> [1, 2, 3, 4, 5, 6, 7]

x[:0] = [-1, 0] # <-- Appends a list to front of a list

x

#> [-1, 0, 1, 2, 3, 4, 5, 6, 7]

x[1:-1] = [] # <-- Removes elements from a list

x

#> [-1, 7]Appending a single element to a list is such a common operation that there’s a special append method for it:

One problem can occur if you try to append one list to another. The list gets appended as a single element of the main list:

The extend method is like the append method except that it allows you to add one list to another:

There’s also a special insert method to insert new list elements between two existing elements or at the front of the list. insert is used as a method of a list object and takes two additional arguments. The first additional argument is the index position in the list where the new element should be inserted, and the second is the new element itself:

x = [1, 2, 3]

x.insert(2, "hello")

x

#> [1, 2, 'hello', 3]

x.insert(0, "start")

x

#> ['start', 1, 2, 'hello', 3]insert understands list indices as discussed in section 5.2, but for most uses, it’s easiest to think of list.insert(n, elem) as meaning insert elem just before the nth element of the list. insert is just a convenience method. Anything that can be done with insert can also be done with slice assignment. That is, list.insert(n, elem) is the same thing as list[n:n] = [elem] when n is nonnegative. Using insert makes for somewhat more readable code, and insert even handles negative indices:

The del statement is the preferred method of deleting list items or slices. It doesn’t do anything that can’t be done with slice assignment, but it’s usually easier to remember and easier to read:

In general, del list[n] does the same thing as list[n:n+1] = [], whereas del list[m:n] does the same thing as list[m:n] = [].

The remove method isn’t the inverse of insert. Whereas insert inserts an element at a specified location, remove looks for the first instance of a given value in a list and removes that value from the list:

x = [1, 2, 3, 4, 3, 5]

x.remove(3)

x

#> [1, 2, 4, 3, 5]

x.remove(3)

x

#> [1, 2, 4, 5]

x.remove(3)

#> ---------------------------------------------------------------------------

#> ValueError Traceback (most recent call last)

#> <ipython-input-9-be7b9eddb459> in <cell line: 1>()

#> ----> 1 x.remove(3)

#> ValueError: list.remove(x): x not in listIf remove can’t find anything to remove, it raises an error. You can catch this error by using the exception-handling abilities of Python, or you can avoid the problem by using in to check for the presence of something in a list before attempting to remove it (see section 5.5.1 for examples of in).

The reverse method is a more specialized list modification method. It efficiently reverses a list in place:

Suppose that you have a list of 10 items. How might you move the last three items from the end of the list to the beginning, keeping them in the same order?

Lists can be sorted by using the built-in Python sort method:

This method does an in-place sort—that is, changes the list being sorted. To sort a list without changing the original list, you have two options. You can use the sorted() built-in function, discussed in section 5.4.2, or you can make a copy of the list and sort the copy:

x = [2, 4, 1, 3]

y = x[:] # <-- A full list slice makes a copy of the list.

y.sort() # <-- Sorts method on copy, not original

y

#> [1, 2, 3, 4]

x

#> [2, 4, 1, 3]Note that here we used the [:] notation to make a slice of the entire list. Since slicing creates a new list, this in effect creates a copy of the entire list, which we can then sort using the sort() method.

Sorting works with strings too:

The sort method can sort just about anything because Python can compare just about anything. But there’s one caveat in sorting: the default key method used by sort requires all items in the list to be of comparable types. That means that using the sort method on a list containing both numbers and strings raises an exception:

x = [1, 2, 'hello', 3]

x.sort()

#> ---------------------------------------------------------------------------

#> TypeError Traceback (most recent call last)

#> <ipython-input-8-9c6228d80c69> in <cell line: 2>()

#> 1 x = [1, 2, 'hello', 3]

#> ----> 2 x.sort()

#> 3

#> TypeError: '<' not supported between instances of 'str' and 'int'Conversely, you can sort a list of lists:

According to the built-in Python rules for comparing complex objects, the sublists are sorted first by the ascending first element and then by the ascending second element.

sort is even more flexible; it has an optional reverse parameter that causes the sort to be in reverse order when reverse=True, and it’s possible to use your own key function to determine how elements of a list are sorted.

To use custom sorting, you need to be able to define functions—something I haven’t talked about yet. In this section, I also discuss the fact that len(string) returns the number of characters in a string. String operations are discussed more fully in chapter 6.

By default, sort uses built-in Python comparison functions to determine ordering, which is satisfactory for most purposes. At times, though, you want to sort a list in a way that doesn’t correspond to this default ordering. Suppose you want to sort a list of words by the number of characters in each word, as opposed to the lexicographic sort that Python normally carries out.

To do this, write a function that returns the value, or key, that you want to sort on and use it with the sort method. That function in the context of sort is a function that takes one argument and returns the key or value that the sort function is to use.

For number-of-characters ordering, a suitable key function could be

This key function is trivial. It passes the length of each string back to the sort method, rather than the strings themselves.

After you define the key function, using it is a matter of passing it to the sort method by using the key keyword. Because functions are Python objects, they can be passed around like any other Python objects. Here’s a small program that illustrates the difference between a default sort and your custom sort:

def comp_num_of_chars(string1):

return len(string1)

word_list = ['Python', 'is', 'better', 'than', 'C']

word_list.sort()

print(word_list)

#> ['C', 'Python', 'better', 'is', 'than']

word_list = ['Python', 'is', 'better', 'than', 'C']

word_list.sort(key=comp_num_of_chars)

print(word_list)

#> ['C', 'is', 'than', 'Python', 'better']The first list is in lexicographical order (with uppercase coming before lowercase), and the second list is ordered by ascending number of characters.

It’s also possible to use an anonymous lambda function in the sort() call itself. This can be handy if the sort function is very short (which it usually is) and only used for sorting (again, as it should be). The code using the function comp_num_of_chars could also be written with a lambda function as

The lambda is defined with three elements: a variable for the parameter, in this case x; a colon; and the return value, in this example len(x). For simple functions with only a simple return value, a lambda function saves having to create more function names, and you can see how the sort key works without having to look at the function elsewhere.

Custom sorting is very useful, but if performance is critical, it may be slower than the default. Usually, this effect is minimal, but if the key function is particularly complex, the effect may be more than desired, especially for sorts involving hundreds of thousands or millions of elements.

One particular place to avoid custom sorts is where you want to sort a list in descending, rather than ascending, order. In this case, use the sort method’s reverse parameter set to True. If for some reason you don’t want to do that, it’s still better to sort the list normally and then use the reverse method to invert the order of the resulting list. These two operations together—the standard sort and the reverse—will still be much faster than a custom sort.

Lists have a built-in method to sort themselves, but other iterables in Python, such as the keys of a dictionary, don’t have a sort method. Python also has the built-in function sorted(), which returns a sorted list from any iterable. sorted() uses the same key and reverse parameters as the sort method:

Suppose that you have a list in which each element is in turn a list: [[1, 2, 3], [2, 1, 3], [4, 0, 1]]. If you wanted to sort this list by the second element in each list so that the result would be [[4, 0, 1], [2, 1, 3], [1, 2, 3]], what function would you write to pass as the key value to the sort() method?

Several other list methods are frequently useful, but they don’t fall into any specific category.

It’s easy to test whether a value is in a list by using the in operator, which returns a Boolean value. You can also use the inverse: the not in operator:

To create a list by concatenating two existing lists, use the + (list concatenation) operator, which leaves the argument lists unchanged:

Use the * operator to produce a list of a given size, which is initialized to a given value. This operation is a common one for working with large lists whose size is known ahead of time. Although you can use append to add elements and automatically expand the list as needed, you obtain greater efficiency by using * to correctly size the list at the start of the program. A list that doesn’t change in size doesn’t incur any memory reallocation overhead:

When used with lists in this manner, * (which in this context is called the list multiplication operator) replicates the given list the indicated number of times and joins all the copies to form a new list. This is the standard Python method for defining a list of a given size ahead of time. A list containing a single instance of None is commonly used in list multiplication, but the list can be anything:

You can use min and max to find the smallest and largest elements in a list. You’ll probably use min and max mostly with numerical lists, but you can use them with lists containing any type of element. Trying to find the maximum or minimum object in a set of objects of different types causes an error if comparing those types doesn’t make sense:

max([4, "Hello", [1, 2]])

#> ---------------------------------------------------------------------------

#> TypeError Traceback (most recent call last)

#> <ipython-input-7-15ab1869d5d5> in <cell line: 1>()

#> ----> 1 max([4, "Hello", [1, 2]])

#> TypeError: '>' not supported between instances of 'str' and 'int'If you want to find where in a list a value can be found (rather than wanting to know only whether the value is in the list), use the index method. This method searches through a list looking for a list element equivalent to a given value and returns the position of that list element:

x = [1, 3, "five", 7, -2]

x.index(7)

#> 3

x.index(5)

#> ---------------------------------------------------------------------------

#> ValueError Traceback (most recent call last)

#> <ipython-input-6-96ad5df81983> in <cell line: 1>()

#> ----> 1 x.index(5)

#> ValueError: 5 is not in listAttempting to find the position of an element that doesn’t exist in the list raises an error, as shown here. This error can be handled in the same manner as the analogous error that can occur with the remove method (that is, by testing the list with in before using index).

count also searches through a list, looking for a given value, but it returns the number of times that the value is found in the list rather than positional information:

You can see that lists are very powerful data structures, with possibilities that go far beyond those of plain old arrays. List operations are so important in Python programming that it’s worth laying them out for easy reference, as shown in table 5.1.

| List operation | Explanation | Example |

|---|---|---|

[] |

Creates an empty list | x = [] |

len |

Returns the length of a list | len(x) |

append |

Adds a single element to the end of a list | x.append('y') |

extend |

Adds another list to the end of the list | x.extend(['a', 'b']) |

insert |

Inserts a new element at a given position in the list | x.insert(0, 'y') |

del |

Removes a list element or slice | del(x[0]) |

remove |

Searches for and removes a given value from a list | x.remove('y') |

reverse |

Reverses a list in place | x.reverse() |

sort |

Sorts a list in place | x.sort() |

+ |

Adds two lists together | x1 + x2 |

* |

Replicates a list | x = ['y'] * 3 |

min |

Returns the smallest element in a list | min(x) |

max |

Returns the largest element in a list | max(x) |

index |

Returns the position of a value in a list | x.index['y'] |

count |

Counts the number of times a value occurs in a list | x.count('y') |

sum |

Sums the items (if they can be summed) | sum(x) |

in |

Returns whether an item is in a list | 'y' in x |

Being familiar with these list operations will make your life as a Python coder much easier.

What would be the result of len([[1,2]] * 3)? What are two differences between using the in operator and a list’s index() method? Which of the following will raise an exception? min(["a", "b", "c"]); max([1, 2, "three"]); [1, 2, 3].count("one")

If you have a list x, write the code to safely remove an item if—and only if—that value is in the list. Modify that code to remove the element only if the item occurs in the list more than once.

This section covers another advanced topic that you may want to skip if you’re just learning the language.

Lists can be nested. One application of nesting is to represent two-dimensional matrices. The members of these matrices can be referred to by using two-dimensional indices. Indices for these matrices work as follows:

m = [[0, 1, 2], [10, 11, 12], [20, 21, 22]]

m[0]

#> [0, 1, 2]

m[0][1]

#> 1

m[2]

#> [20, 21, 22]

m[2][2]

#> 22This mechanism scales to higher dimensions in the manner you’d expect.

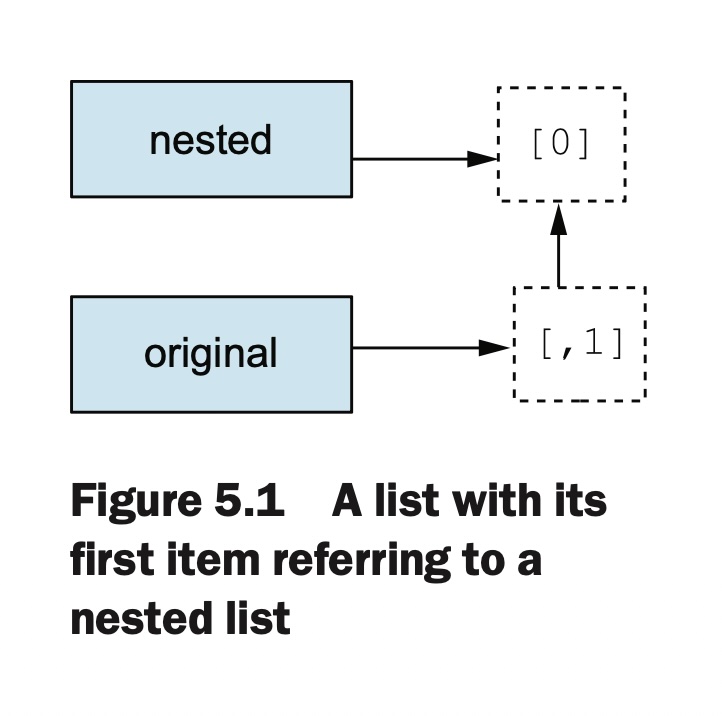

Most of the time, this is all you need to concern yourself with. But you may run into a problem with nested lists—specifically, the way that variables refer to objects and how some objects (such as lists) can be modified (are mutable). An example is the best way to illustrate:

Figure 5.1 shows what this example looks like.

Now the value in the nested list can be changed by using either the nested or the original variables:

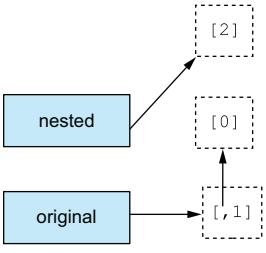

But if nested is set to another list, the connection between them is broken:

Figure 5.2 illustrates this condition.

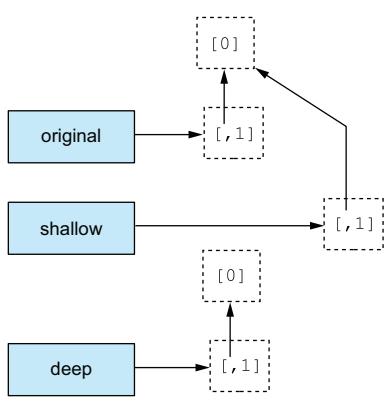

You’ve seen that you can obtain a copy of a list by taking a full slice (that is, x[:]). You can also obtain a copy of a list by using the + or * operators (for example, x + [] or x * 1). These techniques are slightly less efficient than the slice method. All three create what is called a shallow copy of the list, which is probably what you want most of the time. But if your list has other lists nested in it, you may want to make a deep copy. You can do this with the deepcopy function of the copy module:

See figure 5.3 for an illustration.

The lists pointed at by the original or shallow variables are connected. Changing the value in the nested list through either one of them affects the other:

shallow[1] = 2

shallow

#> [[0], 2]

original

#> [[0], 2]

shallow[0][0] = 'zero'

original

#> [['zero'], 1]The deep copy is independent of the original, and no change to it has any effect on the original list:

This behavior is the same for any other nested objects in a list that are modifiable (such as dictionaries).

Now that you’ve seen what lists can do, it’s time to look at tuples.

Suppose that you have the following list: x = [[1, 2, 3], [4, 5, 6], [7, 8, 9]] What code could you use to get a copy y of that list in which you could change the elements without the side effect of changing the contents of x?

Tuples are data structures that are very similar to lists, but they can’t be modified; they can only be created. Tuples are so much like lists that you may wonder why Python bothers to include them. The reason is that tuples have important roles that can’t be efficiently filled by lists, such as keys for dictionaries.

Creating a tuple is similar to creating a list: assign a sequence of values to a variable. A list is a sequence that’s enclosed by [ and ]; a tuple is a sequence that’s enclosed by ( and ):

This line creates a three-element tuple.

After a tuple is created, using it is so much like using a list that it’s easy to forget that tuples and lists are different data types:

x[2]

#> 'c'

x[1:]

#> ('b', 'c')

len(x)

#> 3

max(x)

#> 'c'

min(x)

#> 'a'

5 in x

#> False

5 not in x

#> TrueThe main difference between tuples and lists is that tuples are immutable. An attempt to modify a tuple results in a confusing error message, which is Python’s way of saying that it doesn’t know how to set an item in a tuple:

x[2] = 'd'

#> ---------------------------------------------------------------------------

#> TypeError Traceback (most recent call last)

#> <ipython-input-2-dcc4b983047e> in <cell line: 1>()

#> ----> 1 x[2] = 'd'

#> TypeError: 'tuple' object does not support item assignmentYou can create tuples from existing ones by using the + and * operators:

A “copy” (actually an alias pointing to the original object) of a tuple can be made in any of the same ways as for lists:

Tuples themselves can’t be modified. But if a tuple contains any mutable objects (for example, lists or dictionaries), these objects may be changed if they’re still assigned to their own variables. Tuples that contain mutable objects aren’t allowed as keys for dictionaries.

Because tuples can’t be modified, the shallow copy methods shown here don’t return new objects but aliases pointing to the same object. This is true not only for tuples but also for string and byte objects, which are covered in the next chapter. In practice you can still think of these aliases as “copies” since there is nothing you could do with a true copy that you can’t also do with an alias (and aliases are much faster and more memory efficient).

A small syntactical point is associated with using tuples. Because the square brackets used to enclose a list aren’t used elsewhere in Python, it’s clear that [] means an empty list and that [1] means a list with one element. The same thing isn’t true of the parentheses used to enclose tuples. Parentheses can also be used to group items in expressions to force a certain evaluation order. If you say (x + y) in a Python program, do you mean that x and y should be added and then put into a one-element tuple, or do you mean that the parentheses should be used to force x and y to be added before any expressions to either side come into play?

This situation is a problem only for tuples with one element because tuples with more than one element always include commas to separate the elements, and the commas tell Python that the parentheses indicate a tuple, not a grouping. In the case of oneelement tuples, Python requires that the element in the tuple be followed by a comma to disambiguate the situation. In the case of zero-element (empty) tuples, there’s no problem. An empty set of parentheses must be a tuple because it’s meaningless otherwise:

As a convenience, Python permits tuples of variables to appear on the left side of an assignment operator, in which case variables in the tuple receive the corresponding values from the tuple on the right side of the assignment operator. The following is a simple example:

This example can be written even more simply because Python recognizes tuples in an assignment context even without the enclosing parentheses. The values on the right side are packed into a tuple and then unpacked into the variables on the left side:

One line of code has replaced the following four lines of code:

This technique is a convenient way to swap values between variables. Instead of saying

simply say

To make things even more convenient, Python 3 has an extended unpacking feature, allowing an element marked with * to absorb any number of elements not matching the other elements. Again, some examples make this feature clearer:

x = (1, 2, 3, 4)

a, b, *c = x

a, b, c

#> (1, 2, [3, 4])

a, *b, c = x

a, b, c

#> (1, [2, 3], 4)

*a, b, c = x

a, b, c

#> ([1, 2], 3, 4)

a, b, c, d, *e = x

a, b, c, d, e

#> (1, 2, 3, 4, [])Note that the starred element receives all the surplus items as a list and that if there are no surplus elements, the starred element receives an empty list.

Packing and unpacking can also be performed with lists:

[a, b] = [1, 2]

[c, d] = 3, 4

[e, f] = (5, 6)

(g, h) = 7, 8

i, j = [9, 10]

k, l = (11, 12)

a

#> 1

[b, c, d]

#> [2, 3, 4]

(e, f, g)

#> (5, 6, 7)

h, i, j, k, l

#> (8, 9, 10, 11, 12)# Packing

values = 1, 2, 3

print(values) # (1, 2, 3)

# Unpacking

a, b, c = values

print(a, b, c) # 1 2 3points = [(1, 2), (3, 4), (5, 6), (7, 8)]

# 좌표의 x 값을 구하는 경우

x_coords = [x for x, y in points]

print(x_coords) # [1, 3, 5, 7]

# 두 좌표의 합을 구하는 경우

sums = [x + y for x, y in points]

print(sums) # [3, 7, 11, 15]

data = [[1, 2, 3, 4, 5], [10, 20, 30], [100, 200, 300, 400]]

# 첫 번째 요소와 나머지를 분리

first_rest = [(first, rest) for first, *rest in data]

print(first_rest) # [(1, [2, 3, 4, 5]), (10, [20, 30]), (100, [200, 300, 400])]prompt: 함수 인자의 맥락에서 패킹과 언패킹에 대해서 설명해주세요.

인자 패킹 (Argument Packing)

# *args - 위치 인자 패킹

def add_all(*numbers):

return sum(numbers)

result = add_all(3, 2, 8) # numbers = (3, 2, 8) 튜플로 패킹됨

print(result) # 15

# **kwargs - 키워드 인자 패킹

def add_all(**kwargs):

return sum(kwargs.values())

result = add_all(a=1, b=2, c=3) # kwargs = {'a': 1, 'b': 2, 'c': 3} 딕셔너리로 패킹됨

print(result) # 6인자 언패킹 (Argument Unpacking)

# * - 시퀀스 언패킹

def add_three(a, b, c):

return a + b + c

numbers = [1, 2, 3]

result = add_three(*numbers) # 개별 인자로 언패킹, add_three(1, 2, 3)과 동일

print(result) # 6

coords = (10, 20, 30) # 튜플도 가능

result = add_three(*coords) # add_three(10, 20, 30)과 동일

# ** - 딕셔너리 언패킹

def greet(name, age, city):

return f"안녕하세요, {name}님! {age}세이고 {city}에 사시는군요."

person_info = {'name': '김철수', 'age': 25, 'city': '서울'} # 딕셔너리를 키워드 인자로 언패킹

message = greet(**person_info) # greet(name='김철수', age=25, city='서울')과 동일

print(message) # 안녕하세요, 김철수님! 25세이고 서울에 사시는군요.*, ** 연산자 비교

# * : 시퀀스 언패킹

numbers = [1, 2, 3, 4, 5]

print(*numbers)

# 출력: 1 2 3 4 5 (개별 값으로 분리됨)

# ** : 딕셔너리 언패킹

person = {'name': 'Alice', 'age': 30}

# print(**person) # 오류! print는 키워드 인자를 이렇게 못 받음

print({**person})

# 출력: {'name': 'Alice', 'age': 30}# * : 리스트/튜플 병합

list1 = [1, 2, 3]

list2 = [4, 5, 6]

# * 언패킹으로 병합

merged = [*list1, *list2]

print(merged) # [1, 2, 3, 4, 5, 6]

# 튜플에서도 가능

tuple1 = (1, 2)

tuple2 = (3, 4)

merged_tuple = (*tuple1, *tuple2, 5, 6)

print(merged_tuple) # (1, 2, 3, 4, 5, 6)

# ** : 딕셔너리 병합

dict1 = {'a': 1, 'b': 2}

dict2 = {'c': 3, 'd': 4}

merged_dict = {**dict1, **dict2} # 다음과 동일: merged_dict = dict1 | dict2

print(merged_dict) # {'a': 1, 'b': 2, 'c': 3, 'd': 4}def greet(first, last, age):

print(f"{first} {last} is {age} years old")

# * : 위치 인자로 전달

args = ['Alice', 'Smith', 30]

greet(*args) # greet('Alice', 'Smith', 30)과 동일

# 출력: Alice Smith is 30 years old

# ** : 키워드 인자로 전달

kwargs = {'first': 'Bob', 'last': 'Jones', 'age': 25}

greet(**kwargs) # greet(first='Bob', last='Jones', age=25)와 동일

# 출력: Bob Jones is 25 years old

# 혼합 사용

args = ['Charlie', 'Brown']

kwargs = {'age': 35}

greet(*args, **kwargs) # greet('Charlie', 'Brown', age=35)

# 출력: Charlie Brown is 35 years old# *args : 가변 위치 인자 받기

def sum_all(*args):

print(f"받은 인자들: {args}") # 튜플로 받음

return sum(args)

print(sum_all(1, 2, 3, 4, 5))

# 받은 인자들: (1, 2, 3, 4, 5)

# 15

# **kwargs : 가변 키워드 인자 받기

def print_info(**kwargs):

print(f"받은 인자들: {kwargs}") # 딕셔너리로 받음

for key, value in kwargs.items():

print(f"{key}: {value}")

print_info(name='Alice', age=30, city='Seoul')

# 받은 인자들: {'name': 'Alice', 'age': 30, 'city': 'Seoul'}

# name: Alice

# age: 30

# city: Seoul

# 둘 다 사용

def flexible_function(required, *args, **kwargs):

print(f"필수 인자: {required}")

print(f"추가 위치 인자: {args}")

print(f"추가 키워드 인자: {kwargs}")

flexible_function(1, 2, 3, 4, x=10, y=20)

# 필수 인자: 1

# 추가 위치 인자: (2, 3, 4)

# 추가 키워드 인자: {'x': 10, 'y': 20}# * : 나머지 요소들 모으기

a, *b, c = [1, 2, 3, 4, 5]

print(a) # 1

print(b) # [2, 3, 4]

print(c) # 5

first, *middle, last = ['a', 'b', 'c', 'd', 'e']

print(first) # 'a'

print(middle) # ['b', 'c', 'd']

print(last) # 'e'

# ** : 딕셔너리에서는 이런 식으로 사용 불가

# 딕셔너리는 다른 방식으로 분해

person = {'name': 'Alice', 'age': 30, 'city': 'Seoul'}

name = person['name']

rest = {k: v for k, v in person.items() if k != 'name'}

print(name) # 'Alice'

print(rest) # {'age': 30, 'city': 'Seoul'}Tuples can be easily converted to lists with the list function, which takes any sequence as an argument and produces a new list with the same elements as the original sequence. Similarly, lists can be converted to tuples with the tuple function, which does the same thing but produces a new tuple instead of a new list:

As an interesting side note, list is a convenient way to break a string into characters:

This technique works because list (and tuple) apply to any Python sequence, and a string is just a sequence of characters. (Strings are discussed fully in chapter 6.)

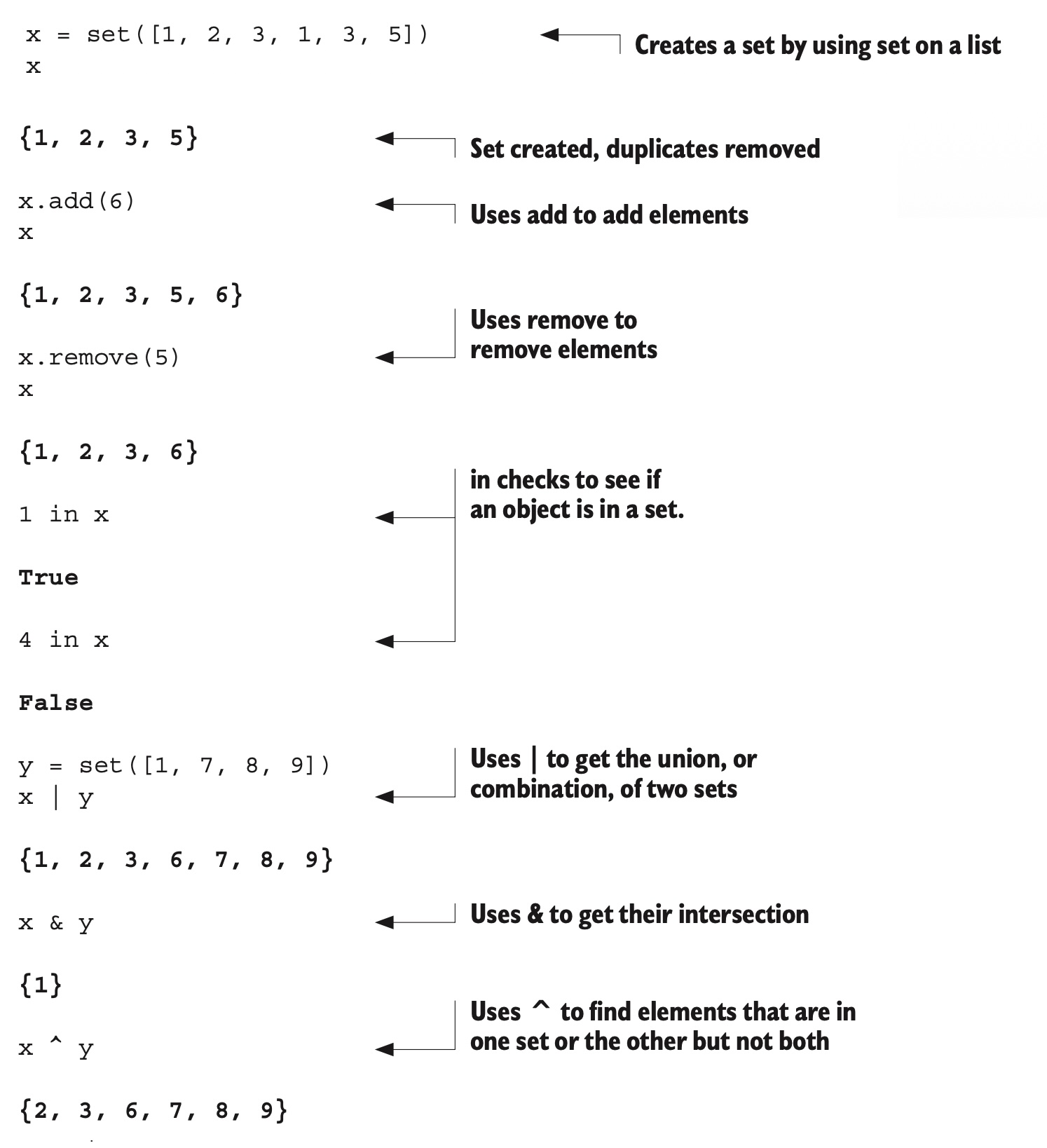

A set in Python is an unordered collection of objects used when membership and uniqueness in the set are the main things you need to know about that object. Like dictionary keys (discussed in chapter 7), the items in a set must be immutable and hashable. This means that ints, floats, strings, and tuples can be members of a set, but lists, dictionaries, and sets themselves can’t.

In addition to the operations that apply to collections in general, such as in, len, and iteration in for loops, sets have several set-specific operations:

You can create a set by using set on a sequence, such as a list. When a sequence is made into a set, duplicates are removed. After creating a set by using the set function, you can use add and remove to change the elements in the set. The in keyword is used to check for membership of an object in a set. You can also use | to get the union, or combination, of two sets, & to get their intersection, and ^ to find their symmetric difference—that is, elements that are in one set or the other but not both.

These examples aren’t a complete listing of set operations, but they are enough to give you a good idea of how sets work. For more information, refer to the official Python documentation.

Because sets aren’t immutable and hashable, they can’t belong to other sets. To remedy that situation, Python has another set type, frozenset, which is just like a set but can’t be changed after creation. Because frozen sets are immutable and hashable, they can be members of other sets:

x = set([1, 2, 3, 1, 3, 5])

z = frozenset(x)

z

#> frozenset({1, 2, 3, 5})

z.add(6)

#> ---------------------------------------------------------------------------

#> AttributeError Traceback (most recent call last)

#> <ipython-input-4-60917eecf5a3> in <cell line: 1>()

#> ----> 1 z.add(6)

#> AttributeError: 'frozenset' object has no attribute 'add'

x.add(z)

x

#> {1, 2, 3, 5, frozenset({1, 2, 3, 5})}If you were to construct a set from the following list, how many elements would the set have? [1, 2, 5, 1, 0, 2, 3, 1, 1, (1, 2, 3)]

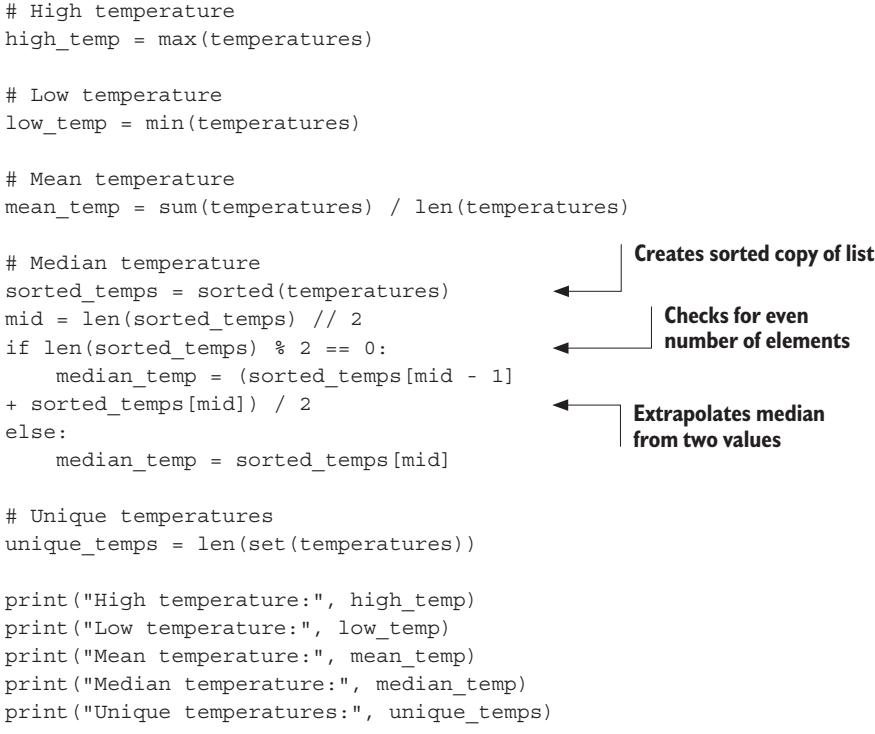

In this lab, the task is to read a set of temperature data (the monthly high temperatures at Heathrow Airport for 1948 through 2016) from a file and then find some basic information: the highest and lowest temperatures, the mean (average) temperature, and the median temperature (the temperature in the middle if all the temperatures are sorted).

The temperature data is in the file lab_05.txt in the source code directory for this chapter. Because I haven’t yet discussed reading files, here’s the code to read the files into a list:

temperatures = []

with open('lab_05.txt') as infile:

for row in infile:

temperatures.append(float(row.strip()))You should find the highest and lowest temperature, the average, and the median. You’ll probably want to use the min(), max(), sum(), len(), and sort() functions/ methods. As a bonus, determine how many unique temperatures are in the list.

You should have a try at creating a solution to this problem using your knowledge and the material presented in this chapter. The preceding code will open a file and read its contents in as a list of floats. You can then use the functions mentioned to get the answers required. And for a bonus, the key is to think of how to convert a list so that only unique values remain.

You may be thinking, “Why should I write this code? Can’t I use AI to generate a solution?” And the answer is yes, you can, but trying to create a solution on your own first helps you both understand the problem better and learn how Python works. Both of those are vital for using an AI code generator—you need a solid understanding of the problem to create an effective prompt for the AI, and a solid understanding of how Python works is essential for evaluating the generated code.

If you are using the Jupyter notebook for this chapter, there is a cell with the code to load the file where you can put in your code to find the mean, median, etc. temperatures. Next, we’ll discuss a sample solution created by a human (me) and compare it to the AI solution.

As mentioned in chapter 2, there are several AI code generation tools, and the landscape is rapidly evolving, so it’s quite possible you will be using something different from the options available as I write this. For the sake of illustration, I will use the code generator available in Google Colaboratory, which is currently available for free, and GitHub Copilot, which is available by subscription with a free trial and runs in Microsoft’s VS Code IDE. I’ll discuss one of the solutions and note if the other system produces something dramatically different.

To generate code in Colaboratory, we need to click the generate link shown in an empty cell, “Start coding or generate with AI.” Once we do that, we get a code generation dialog waiting for a prompt telling the code generator what we want to do. Once we enter the prompt in the field after the Using… button (as shown in the following figure), we can click the Generate button to generate our code.

Creating a prompt is an evolving art, but since the prompt field is limited, we can’t simply copy and paste the whole problem statement. Since we already have the code to read the file into a list as a series of floats, our prompt should focus only on what we need the code to do.

With this guidance, you can go ahead and try creating a prompt and generating some code. Try to evaluate the generated code and, if necessary, refine your prompt.

To create our prompt, we focused on what we needed the code to do. The following is the list of things we needed done:

We might combine this into a prompt as

Using the list temperatures, find the high, low, mean, and median temperatures, and show how many unique temperatures are in the list.

This prompt is complete and concise and covers exactly what we need. Remember that we already had the code to load the file, so we don’t need to ask for that, but of course we do need to add that code to the generated code:

temperatures = []

with open('lab_05.txt') as infile:

for row in infile:

temperatures.append(float(row.strip()))The solution I came up with follows.

max_temp = max(temperatures)

min_temp = min(temperatures)

mean_temp = sum(temperatures)/len(temperatures)

# we'll need to sort to get the median temp

temperatures.sort() # <-- Sorts the temperatures list in place

median_temp = temperatures[len(temperatures)//2] # <-- Gets value from midpoint of list or one above midpoint if an even number of elements

print(f"max = {max_temp}")

print(f"min = {min_temp}")

print(f"mean = {mean_temp}")

print(f"median = {median_temp}")

# Bonus

unique_temps = len(set(temperatures))

print(f"number of uniquie temps - {unique_temps}")This solution does the job and reflects my bias to keep things simple and to keep comments to a minimum.

The code generated by AI is remarkably similar—probably because the problem is not complex. Using Copilot (the Colaboratory code was nearly identical) we get

This code also does the job, with what is arguably nicer formatting and more comments.

The AI version uses more whitespace, which makes the code easier to read. It also uses more comments—to my mind maybe more than needed. It’s good style in Python to use comments only where needed to explain why something is as it is, and in my opinion a comment # High temperature right before the high_temp is not needed. On the other hand, the comment in the human version, # we’ll need to sort to get the median temp, explains why we’re sorting, which is lacking in the AI version. But you could argue that it’s a question of preferences.

Besides some difference in printing the results, the main difference between the two is in finding the median. Again, the median is the value exactly in the middle of a series of sorted values, and the human version sorts the list in place and just picks the value in slot len(temperatures) // 2. The AI version makes a sorted copy of the list using the sorted function, and then, if the list has an even number of elements, meaning that there is no exact middle of the list, it extrapolates a value.

You might think that the AI version is superior: it has more code, and it preserves the original list and takes a more sophisticated view of the median. In real-world programming, however, things are not so clear. If the list of temperatures were several million lines long, then creating a sorted copy may not be an efficient use of memory, and it may well be that there is no need to preserve a version in the original order. It may also be the case that we don’t want an extrapolated median but an actual value in the list, or that we don’t care if the median value is actually from a slot one higher than the midpoint of the list. In that case, the simpler code would be preferable.

The main takeaway from this example is that while AI tools can generate working code that looks nice, it is important to keep in mind when analyzing the code both the problem to be solved and how the code functions to solve it.

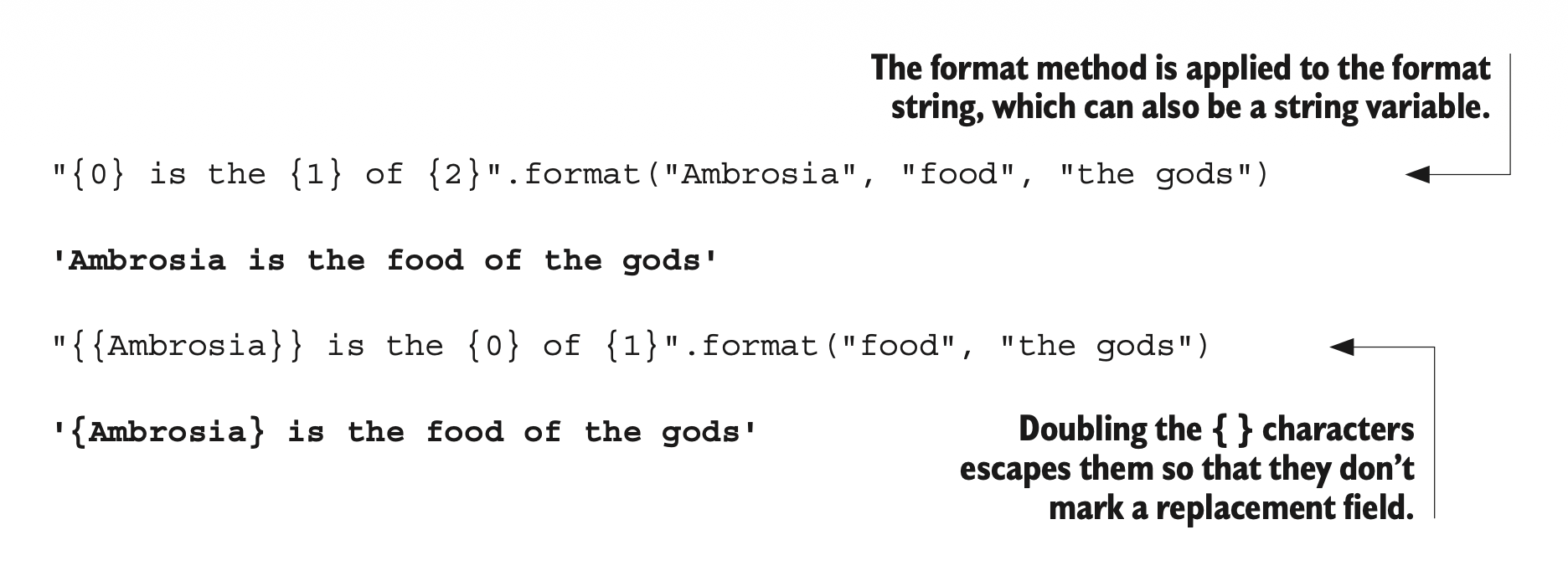

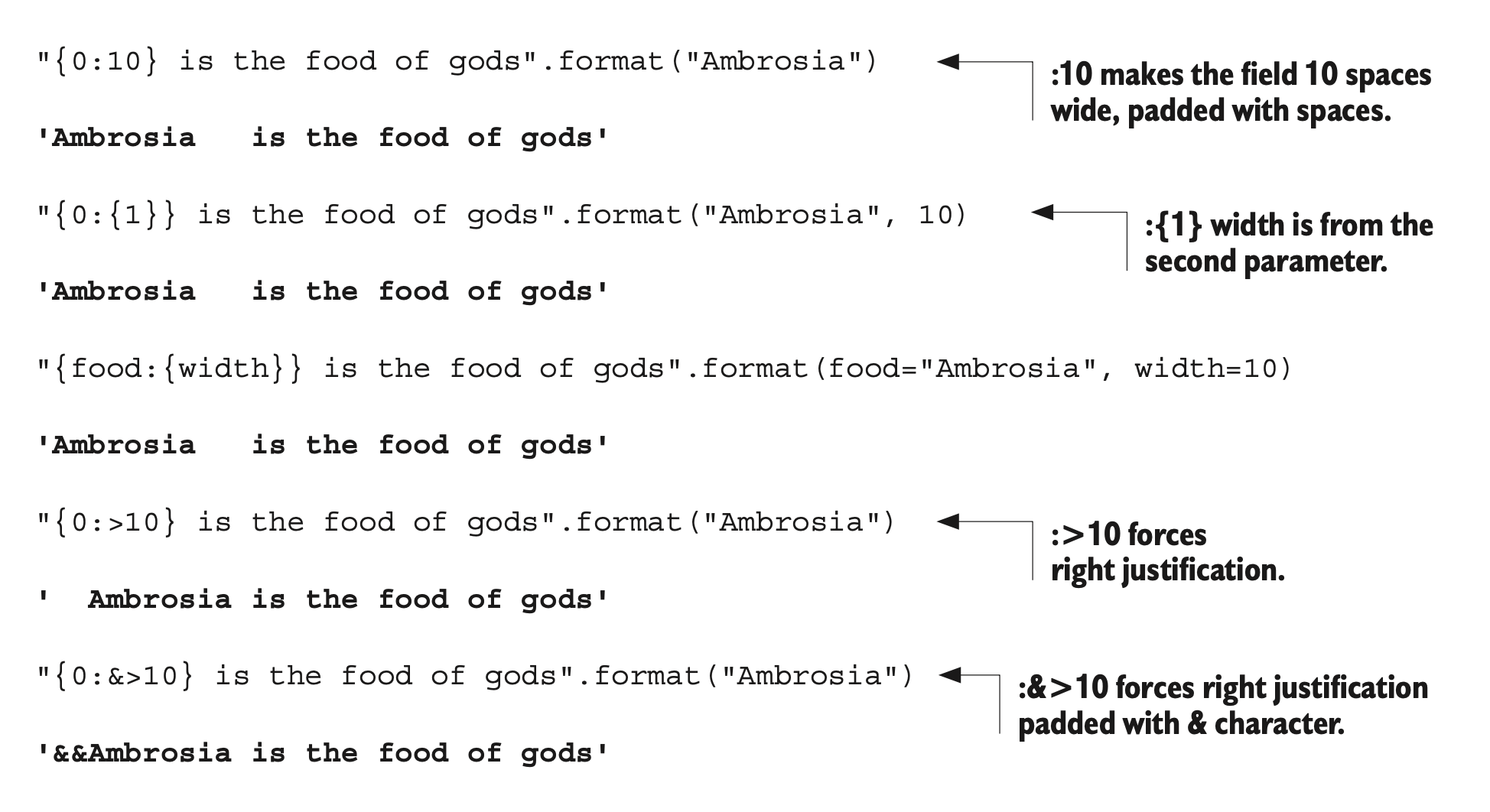

Handling text—from user input to filenames to chunks of text to be processed—is a common chore in programming. Python comes with powerful tools to handle and format text. This chapter discusses the standard string and string-related operations in Python.

For the purposes of extracting characters and substrings, strings can be considered to contain sequences of characters, which means that you can use index or slice notation:

One use for slice notation with strings is to chop the newline off the end of a string (usually, a line that’s just been read from a file):

This code is just an example. You should know that Python strings have other, better methods to strip unwanted characters, but this example illustrates the usefulness of slicing.

It’s also worth noting that there is no separate character type in Python. Whether you use an index, slicing, or some other method, when you extract a single “character” from a Python string, it’s still a one-character string, with the same methods and behavior as the original string. The same is true for the empty string ““.

You can also determine how many characters are in the string by using the len function, which is used to find the number of elements in a list:

But strings aren’t lists of characters. The most noticeable difference between strings and lists is that, unlike lists, strings can’t be modified. Attempting to say something like string.append(‘c’) or string[0] = ‘H’ results in an error. You’ll notice in the previous example that I stripped off the newline from the string by creating a string that was a slice of the previous one, not by modifying the previous string directly. This is a basic Python restriction, imposed for efficiency reasons.

The simplest (and probably most common) way to combine Python strings is to use the string concatenation operator +:

Python also has an analogous string multiplication operator that I’ve found to be useful sometimes but not often:

You’ve already seen a few of the character sequences that Python regards as special when used within strings: represents the newline character, and epresents the tab character. Sequences of characters that start with a backslash and that are used to represent other characters are called escape sequences. Escape sequences are generally used to represent special characters—that is, characters (such as tab and newline) that don’t have a standard one-character printable representation. This section covers escape sequences, special characters, and related topics in more detail.

Python provides a brief list of two-character escape sequences to use in strings (see table 6.1). The same sequences also apply to bytes objects, which will be introduced at the end of this chapter.

| Escape sequence | Character represented |

|---|---|

\' |

Single-quote character |

\" |

Double-quote character |

\\ |

Backslash character |

\a |

Bell character |

\b |

Backspace character |

\f |

Form-feed character |

\n |

Newline character |

\r |

Carriage-return character (not the same as \n) |

\t |

Tab character |

\v |

Vertical tab character |

\r 예시

The ASCII character set defines quite a few more special characters. These characters are accessed by the numeric escape sequences, described in the next section.

You can include any ASCII character in a string by using an octal (base 8) or hexadecimal (base 16) escape sequence corresponding to that character. An octal escape sequence is a backslash followed by three digits defining an octal number; the ASCII character corresponding to this octal number is substituted for the octal escape sequence. A hexadecimal escape sequence begins with \x rather than just \ and can consist of any number of hexadecimal digits. The escape sequence is terminated when a character is found that’s not a hexadecimal digit. For example, in the ASCII character table, the character m happens to have decimal value 109. The value of decimal 109 is octal value 155 and hexadecimal value 6D, so

All three expressions represent a string containing the single character m. But these forms can also be used to represent characters that have no printable representation. The newline character , for example, has octal value 012 and hexadecimal value 0A:

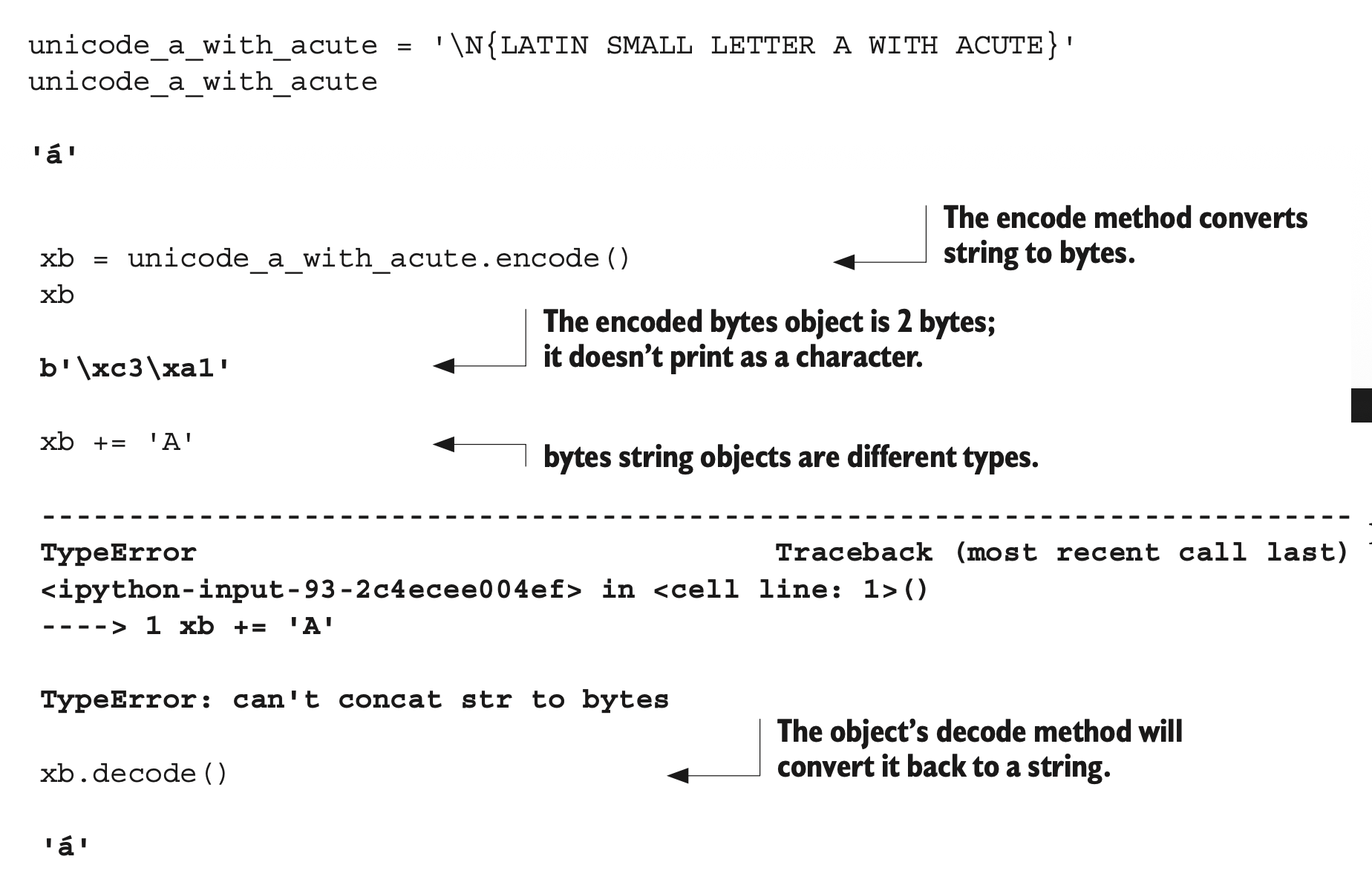

Because all strings in Python 3 are Unicode strings, they can also contain almost every character from every language available. Although a discussion of the Unicode system is far beyond the scope of this book, the following examples illustrate that you can also escape any Unicode character, either by number (as shown earlier) or by Unicode name:

unicode_a ='\N{LATIN SMALL LETTER A}' # <-- Escapes by Unicode name

unicode_a

#> 'a' # <-- ASCII characters are Unicode characters.

unicode_a_with_acute = '\N{LATIN SMALL LETTER A WITH ACUTE}'

unicode_a_with_acute

#> 'á'

"\u00E1" # <-- Escapes by number, using \u

#> 'á'This code shows how you can access characters, including common ASCII characters, by using their Unicode names with \N(Unicode name) or by using their number with \u.

Unicode 코드 포인트 표기

| 문자 | 10진수 | 16진수 | Unicode | Bytes (UTF-8) (16진수) |

|---|---|---|---|---|

A |

65 | 0041 | U+0041 | 41 |

á |

225 | 00E1 | U+00E1 | C3 A1 |

가 |

44032 | AC00 | U+AC00 | EA B0 80 |

中 |

20013 | 4E2D | U+4E2D | E4 B8 AD |

🎉 |

127881 | 1F389 | U+0001F389 | F0 9F 8E 89 |

I talked earlier about the difference between evaluating a Python expression interactively and printing the result of the same expression by using the print function. Although the same string is involved, the two operations can produce screen outputs that look different. A string that’s evaluated at the top level of an interactive Python session is shown with all of its special characters as octal escape sequences, which makes clear what’s in the string. Meanwhile, the print function passes the string directly to the terminal program, which may interpret special characters in special ways. The following is what happens with a string consisting of an a followed by a newline, a tab, and a b:

In the first case, the newline and tab are shown explicitly in the string; in the second, they’re used as newline and tab characters.

A normal print function also adds a newline to the end of the string. Sometimes (that is, when you have lines from files that already end with newlines), you may not want this behavior. Giving the print function an end parameter of “” causes the print function to suppress the final newline:

Most of the Python string methods are built into the standard Python string class, so all string objects have them automatically. The standard string module also contains some useful constants. Modules are discussed in detail in chapter 10.

For the purposes of this section, you need only remember that most string methods are attached to the string object they operate on by a dot (.), as in x.upper(). That is, they’re prepended with the string object followed by a dot. Because strings are immutable, the string methods are used only to obtain their return value and don’t modify the string object they’re attached to in any way.

I begin with those string operations that are the most useful and most commonly used; then I discuss some less commonly used but still useful operations. At the end of this section, I discuss a few miscellaneous points related to strings. Not all the string methods are documented here. See the documentation for a complete list of string methods.

Anyone who works with strings is almost certain to find the split and join methods invaluable. They’re the inverse of one another: split returns a list of substrings in the string, and join takes a list of strings and puts them together to form a single string with the original string between each element. Typically, split uses whitespace as the delimiter of the strings it’s splitting, but you can change that behavior via an optional argument.

String concatenation using + is useful but not efficient for joining large numbers of strings into a single string, because each time + is applied, a new string object is created. The previous Hello, World example produces three string objects, two of which are immediately discarded. A better option is to use the join function, which creates only one new string object:

By changing the string used to join, you can put anything you want between the joined strings:

You can even use an empty string, ““, to join elements in a list:

The most common use of split is probably as a simple parsing mechanism for string-delimited records stored in text files. By default, split splits on any whitespace, not just a single space character, but you can also tell it to split on a particular sequence by passing it an optional argument:

x = "You\t\t can have tabs\t\n \t and newlines \n\n " \

"mixed in"

x.split()

#> ['You', 'can', 'have', 'tabs', 'and', 'newlines', 'mixed', 'in']

x = "Mississippi"

x.split("ss")

#> ['Mi', 'i', 'ippi']Sometimes it’s useful to permit the last field in a joined string to contain arbitrary text, perhaps including substrings that may match what split splits on when reading in that data. You can do this by specifying how many splits split should perform when it’s generating its result, via an optional second argument. If you specify n splits, split goes along the input string until it has performed n splits (generating a list with n + 1 substrings as elements) or until it runs out of string. The following are some examples:

x = 'a b c d'

x.split(' ', 1)

#> ['a', 'b c d']

x.split(' ', 2)

#> ['a', 'b', 'c d']

x.split(' ', 9)

#> ['a', 'b', 'c', 'd']When using split with its optional second argument, you must supply a first argument. To get it to split on runs of whitespace while using the second argument, use None as the first argument.

# 로그 형식: "날짜 시간 레벨 메시지"

log_line = "2023-12-01 10:30:45 ERROR Failed to connect to server: Connection refused on port 8080"

# 문제가 되는 방법 - 모든 공백으로 분할

parts = log_line.split()

print(parts)

# ['2023-12-01', '10:30:45', 'ERROR', 'Failed', 'to', 'connect', 'to', 'server:', 'Connection', 'refused', 'on', 'port', '8080']

# 메시지가 여러 조각으로 나뉘어짐!

# 해결책 - maxsplit 사용

parts = log_line.split(None, 3) # 최대 3번만 분할

print(parts)

# ['2023-12-01', '10:30:45', 'ERROR', 'Failed to connect to server: Connection refused on port 8080']

date, time, level, message = parts

print(f"날짜: {date}")

print(f"시간: {time}")

print(f"레벨: {level}")

print(f"메시지: {message}") # 전체 메시지가 보존됨I use split and join extensively, usually when working with text files generated by other programs. If you want to create more standard output files from your programs, good choices are the csv and json modules in the Python standard library.

# 1. 공백 정규화

messy_text = "Hello world\t\tthis is\n\n messy"

clean_text = ' '.join(messy_text.split())

print(clean_text) # "Hello world this is messy"

# 2. 경로 처리

unix_path = "/home/user/documents/file.txt"

windows_path = '\\'.join(unix_path.split('/'))

print(windows_path) # "\home\user\documents\file.txt"

# 3. 데이터 변환

pipe_delimited = "apple|banana|cherry|date"

comma_delimited = ','.join(pipe_delimited.split('|'))

print(comma_delimited) # "apple,banana,cherry,date"

# 4. 텍스트 필터링

text_with_bad_words = "This is a good sentence. This is bad. This is okay."

sentences = text_with_bad_words.split('. ')

filtered = '. '.join([s for s in sentences if 'bad' not in s])

print(filtered) # "This is a good sentence. This is okay."Prompt: csv, json을 포함해 현대적인 데이터 파일 형식에는 어떤 것이 있으며 그 특징을 예시와 함께 설명해주세요.

How could you use split and join to change all the whitespace in string x to dashes, such as changing “this is a test” to “this-is-a-test”?

You can use the functions int and float to convert strings to integer or floating-point numbers, respectively. If they’re passed a string that can’t be interpreted as a number of the given type, these functions raise a ValueError exception. Exceptions are explained in chapter 14.

In addition, you may pass int an optional second argument, specifying the numeric base to use when interpreting the input string:

float('123.456')

#> 123.456

float('xxyy')

#> ---------------------------------------------------------------------------

#> ValueError Traceback (most recent call last)

#> <ipython-input-30-14b25c5b2052> in <cell line: 1>()

#> ----> 1 float('xxyy')

#> ValueError: could not convert string to float: 'xxyy'

int('3333')